A truly amazing gift my dad made: a 3D woodcut of the Practical Computer logo. And the color matching is perfect, too!

It deserves to be right under @jennyrjohnsonart.bsky.social’s amazing work.

The Seinfeld Transcoding Problem

Over the past 2 months, I’ve been working on digitizing our physical media library for easier access. Part of this project was digitizing and transcoding the government-issued complete Seinfeld DVD collection. Which is weird in about 4 different ways.

Basically, it acted as a great lesson in:

- The importance of good source data, both in how you structure it and what data you have available to you

- When to pull the rip cord on automation (or, more importantly, recognizing the things you’ve already automated)

- Not over-automating, and recognizing when a task is inefficient but the process is efficient

This is all over the place!

To start, the data on the disks for the early seasons is all over the place. For example, if a disk holds episodes 1-4, they aren’t stored in order. That goes against the grain of basically every other disk I’ve transcoded in this process.

What is an episode, anyway?

Seinfeld also throws multiple curveballs in its file structure. Notably, The Stranded is listed as Season 3, Episode 10, but was filmed as part of Season 2’s production schedule. So, it’s actually on the Season 1 & 2 box set.

Pop quiz: If an episode is a 2-parter, how should it be broken up as a file?

There are 2 schools of thought:

- As a single video, since it’s one contiguous story

- As distinct episodes, since that’s how it aired and is syndicated

Seinfeld takes both approaches, based on air date. In the same season, one two-parter is two distinct video files while another is a single video; the deciding factor is whether both parts aired on the same night or on different nights. Every metadata source, however, lists them as separate entries.

This would have thrown both bulk-renaming and computer vision identification for a loop, so I had to make a judgement call and come up with a modified naming schema for these cases (e.g., S4.E10-11).
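A tiny helper can keep that schema consistent across a whole season. This is a minimal sketch, assuming the `S{season}.E{first}-{last}` pattern from the example is applied to every combined two-parter; `episode_label` is a hypothetical name, not part of any tool mentioned in this post.

```python
from typing import Optional


def episode_label(season: int, first: int, last: Optional[int] = None) -> str:
    """Build a label like 'S3.E10' or, for a combined two-parter, 'S4.E10-11'.

    Hypothetical helper: season/episode numbers are assumed to come from the
    metadata listing (e.g. TMDB), not from the box set or disk a file came from.
    """
    if last is None or last == first:
        return f"S{season}.E{first}"
    if last < first:
        raise ValueError("last episode must not come before the first")
    return f"S{season}.E{first}-{last}"


print(episode_label(3, 10))      # S3.E10
print(episode_label(4, 10, 11))  # S4.E10-11
```

In this sketch the label follows the aired numbering, so an episode like The Stranded would be labeled by its listed season and episode regardless of which box set it was ripped from.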

Make sure you’ve got good external data

My first pass was to look for a computer vision tool, since this is kind of a perfect use case for computer vision. I found a Python script that extracts frames from a video, searches TMDB for the episode whose snapshots match best, and renames the files.

A great workflow in theory, but I ran into the problem that plagues computer vision: sourcing. While TMDB does have an API for grabbing an episode’s screenshots, its dataset for Seinfeld has maybe 2 screenshots per episode. That’s not a strong enough dataset for automated identification: when I tested it on an episode I had already correctly ID’d, it whiffed.

I’d also found some potential tools to match by subtitles, but couldn’t get them to work (I’m pretty sure they’re one-off vibe-coded projects that worked for someone’s specific case). Plus, they were inefficient: instead of reading the actual subtitles in the file, they transcribed the audio, which would have slowed down the whole process and been much more resource-intensive. I already have the source subtitles! They don’t need to listen and produce an imperfect transcript; I have the transcript! Comparing text against text would be far more efficient, so this didn’t inspire confidence in the project.
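Comparing text against text doesn’t need much machinery. Here’s a minimal sketch using Python’s standard-library `difflib`, assuming the subtitle track has already been extracted to plain text and that you have a dictionary of reference transcripts keyed by episode label. Both the `references` data and the `best_match` helper are hypothetical; this is not how the tools I tried worked.

```python
import re
from difflib import SequenceMatcher


def words(text: str) -> list[str]:
    """Lowercase and keep only word-like tokens so punctuation and casing don't matter."""
    return re.findall(r"[a-z0-9']+", text.lower())


def best_match(subtitle_text: str, references: dict[str, str]) -> tuple[str, float]:
    """Return (label, similarity) for the reference transcript closest to the subtitles."""
    needle = words(subtitle_text)
    scores = {}
    for label, transcript in references.items():
        # autojunk=False stops difflib from discarding frequent words
        # (like "the") on long inputs, which would skew the ratio.
        matcher = SequenceMatcher(None, needle, words(transcript), autojunk=False)
        scores[label] = matcher.ratio()
    label = max(scores, key=scores.get)
    return label, scores[label]


# Made-up labels and snippets, purely for illustration.
references = {
    "ep_a": "What's the deal with airline food?",
    "ep_b": "These pretzels are making me thirsty.",
}
print(best_match("These pretzels... are making me THIRSTY!", references))  # ('ep_b', 1.0)
```

`SequenceMatcher` can get slow on full-episode transcripts, so comparing a slice of dialogue (say, the first few minutes) is probably a reasonable trade-off.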

Even though these approaches failed, they did help shape the final workflow. Seinfind, for example, turned up while I was looking for subtitle sources.

How I processed all these videos in a few hours

The final workflow ended up being:

- GUI:

  - Multiple windows of Windows Explorer:

    - One for the tabs of raw videos from the DVDs

    - One for the renamed, identified videos that will be transcoded

  - A browser with TMDB and seinfind.com (which I’d found while looking for subtitle sources) open

  - VLC in a small window in the corner

- Workflow:

  - Check the physical disk for the corresponding folder (Season 3, Disk 2) to get the episode range

  - Bulk-rename the files, based on their “created at” timestamp

  - Open each video, scrub for frames, scenarios, or identifying phrases

  - Check TMDB/Seinfind with that information, get the match

  - Rename the file, onto the next one

The importance of structuring data

This workflow was possible because rather than 23 episodes in a season, or 180 episodes to work through, the data was broken into chunks of 4-6 episodes using a stable identifier: the disk they came from. As I ripped each disk, I made sure its files went into a directory named after the disk. For example, everything from “Season 4, Disk 3” went into a “Season 4, Disk 3” directory for later processing.

Then the problem of “oh, which season, which episode am I looking at?” became much easier, because I was only looking at 4-6 episodes at a time. With the batch renamer, I could take a best-guess pass and sort from there. Essentially, I automated that part of the process with:

For this part of the operation, these are episodes 4 through 10.
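That best-guess pass boils down to two steps: sort one disk’s files by creation time, then hand out episode numbers from the known range. Here’s a minimal sketch, assuming you already have (filename, created-at timestamp) pairs for a single disk directory. `plan_renames` is a hypothetical helper, not the batch renamer I actually used, and it only returns a plan without touching any files.

```python
import os


def plan_renames(files, season, first_episode):
    """Map each file on one disk to a best-guess 'S{season}.E{n}' name.

    files: (filename, created_at) pairs from a single disk's directory.
    Episodes are assigned in created-at order starting at first_episode;
    the result is only a guess that still needs manual verification.
    """
    plan = {}
    ordered = sorted(files, key=lambda item: item[1])
    for offset, (name, _created_at) in enumerate(ordered):
        _stem, ext = os.path.splitext(name)  # keep the original extension
        plan[name] = f"S{season}.E{first_episode + offset}{ext}"
    return plan


# Hypothetical rip output for one disk, with made-up timestamps.
disk = [("title_t02.mkv", 200.0), ("title_t00.mkv", 100.0), ("title_t01.mkv", 150.0)]
print(plan_renames(disk, season=4, first_episode=4))
# {'title_t00.mkv': 'S4.E4.mkv', 'title_t01.mkv': 'S4.E5.mkv', 'title_t02.mkv': 'S4.E6.mkv'}
```

Passing timestamps in as data keeps the sketch platform-neutral, since what counts as a “created at” time varies between operating systems and filesystems.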

After that, manual verification kicked in. When the episodes were out of order, I renamed the correct match with a suffix, then jumped to the incorrect episode and identified it.

Which is just a manually implemented sorting algorithm. Rather than going through all 23 episodes of a season, which would be really intensive for a slow or “differently efficient” CPU (my brain), I break it up into chunks of up to six, because those are much easier to work with. And because the chunks themselves are sequential, sorting each one yields the ordered list of episodes.
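The chunking argument can be written down directly. If each disk holds a contiguous, non-overlapping range of episodes and the disks are processed in order, then sorting inside each small chunk and concatenating the results gives the full ordered list. That is much like the final step of a bucket sort, except the buckets (disks) were handed to you. Here’s a minimal sketch with made-up episode numbers and filenames:

```python
def order_episodes(disks):
    """Sort each disk's (episode_number, filename) pairs, then concatenate.

    Assumes the disks are listed in order and each covers a contiguous,
    non-overlapping episode range, so no sorting across disks is needed.
    """
    ordered = []
    for disk in disks:
        ordered.extend(sorted(disk, key=lambda item: item[0]))
    return ordered


disks = [
    [(3, "c.mkv"), (1, "a.mkv"), (2, "b.mkv")],  # hypothetical disk 1
    [(5, "e.mkv"), (4, "d.mkv"), (6, "f.mkv")],  # hypothetical disk 2
]
result = order_episodes(disks)
print([number for number, _ in result])  # [1, 2, 3, 4, 5, 6]
```

Each sort only ever sees a handful of items. That’s the point: the expensive comparison (actually identifying an episode) never has to reach beyond one disk.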

Task inefficient, Process efficient

This endeavor is a good example of when someone is task inefficient, but the overall process is efficient. If you said:

I, the human, am going to open each video, scrub through it, identify the episode, and sort 180 episodes of television

That feels like a horribly inefficient and daunting process. And at the task level, it’s inefficient! However, at the process level, it’s more efficient because of the work I did to structure the source data, the lessons & tools I found along the way, the existing automations I leveraged, and the nature of probability.

Having good source data and preparing it to be as workable and easy as possible despite its imperfections is what unlocked the sorting algorithm.

The next part was knowing my tools well enough to automate where it makes sense, and then using human discernment to finish the rest of the job. Extracting the DVD data, using a batch renamer, and auto-organizing files into disk-based directories are all things I had already automated. TMDB and Seinfind ended up being crucial resources for pattern matching. And because I’m comfortable with my window manager and keyboard shortcuts, I built out a whole bespoke GUI just for this process, with zero code or extra work!

At the process level, this approach ended up being much more efficient. Rather than trying to find all the edge cases and particulars of this unstructured data (a series of flat files) and translate them into software or prompts, I wrote the program on the fly in my brain. I identified the patterns, used them to build out a structure, and got into a flow state.

Also, I’m only doing this once. I’m not regularly transcoding & organizing nine 23-episode seasons of a beloved broadcast television show that didn’t logically organize its data on disk. I mean, if I end up doing The West Wing, Deep Space 9, or some other giant set, it might make sense to expand these scripts to speed up the process. But I spent 3 hours trying to get them up and running, and the manual work for Seinfeld took 3-4 hours total.

Computer vision and transcription have very useful applications, but they require strong datasets. They also require understanding the data, trying to make the best sense of it, and human discernment.

I feel like in the quest to over-automate everything or write custom software for everything, you end up creating more inefficiencies and wasting more time, when a human could get into a flow state and better adapt to the complexities and problems that certain datasets present.

Replaced the battery on my iPod and only busted up the case a little. These buggers are a nightmare.

After finishing The Rose Field, I am even more convinced that lauded writers need editors trained on them like snipers at all times. 🙃

This always bodes well as a Linear ticket (doing major Little CRM refactoring to remove cruft from prototypes)

What have we *really* gained in the past (almost) decade of tech?

This is incomplete, I’m just trying to get some version of it out there

After years of having it in the backlog, I finally watched the livestream of this Academy event about the parallels between the filmmaking of the original Star Wars and Rogue One. www.oscars.org/events/ga…

Along with seeing some of the tricks used in the original film and how they translated directly into Rogue One (a highlight being that Rogue One ended up creating virtual model kits that could explode “realistically,” as if they were kitbashed), the coolest thing by far was seeing the virtual camera setup created for Gareth Edwards to block out CG shots cohesively.

It was all assembled from off-the-shelf 2016 parts (an iPad, some game controller, and an HTC Vive taped on the back!). That, combined with an actual understanding of filmmaking, made the film shine in ways that CG scenes hadn’t fully nailed before. And maybe since? Fully CG scenes still feel too abstract.

So, it’s been 9 years since Rogue One, and even longer since its production. From what I’ve seen, tech just…hasn’t significantly advanced in terms of enabling that kind of artistic expression. Not to mention, there are actual physical limits to what we can perceive. At some point, the difference between 4K and 5K becomes…moot? At the very least, not worth the effort IMO. Arguably, things have gotten worse because we stopped looping back for refinements.

You can see a version of this in the limitations of The Volume, an iteration of ILM’s StageCraft that’s been heavily used for a bunch of Star Wars projects. It’s an admittedly cool tool for hybrid sets, but once you notice it, it’s jarring how most Star Wars shows shot in The Volume are blocked as if they’re stage plays.

The point being: The Volume is a specific tool, designed to solve a specific set of problems. Just like how the duct-taped virtual camera allowed for quick, cheap blocking of fully CG shots, and how The Last Jedi’s physical models, copy/pasted into CG shots, grounded those scenes.

A prevailing mantra of tech for the past almost-decade is the idea of an omnitool: a small subset of ~tools~ services that act as a panacea. But that’s not how collective endeavors work! The difficulty, challenge, and joy is finding the right makeup of existing tools & some new techniques to push a project to completion. Then, going back and figuring out/teaching that new technique!

No matter what AI Chuds try to tell us, there’s always value in doing the work itself. There’s still so much ground to cover with our existing tools & approaches. Working faster/cheaper/more vibes-y isn’t going to make something better, especially with how rickety our foundations currently are.

How much stock do you put in Cumulative Layout Shift? I’ve got a site that AFAICS is pretty damn stable, but Lighthouse has its CLS marked at ~0.3. Not to mention the oscillations it has on repeat runs.

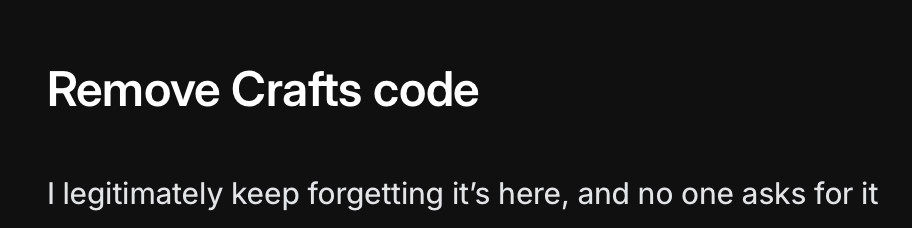

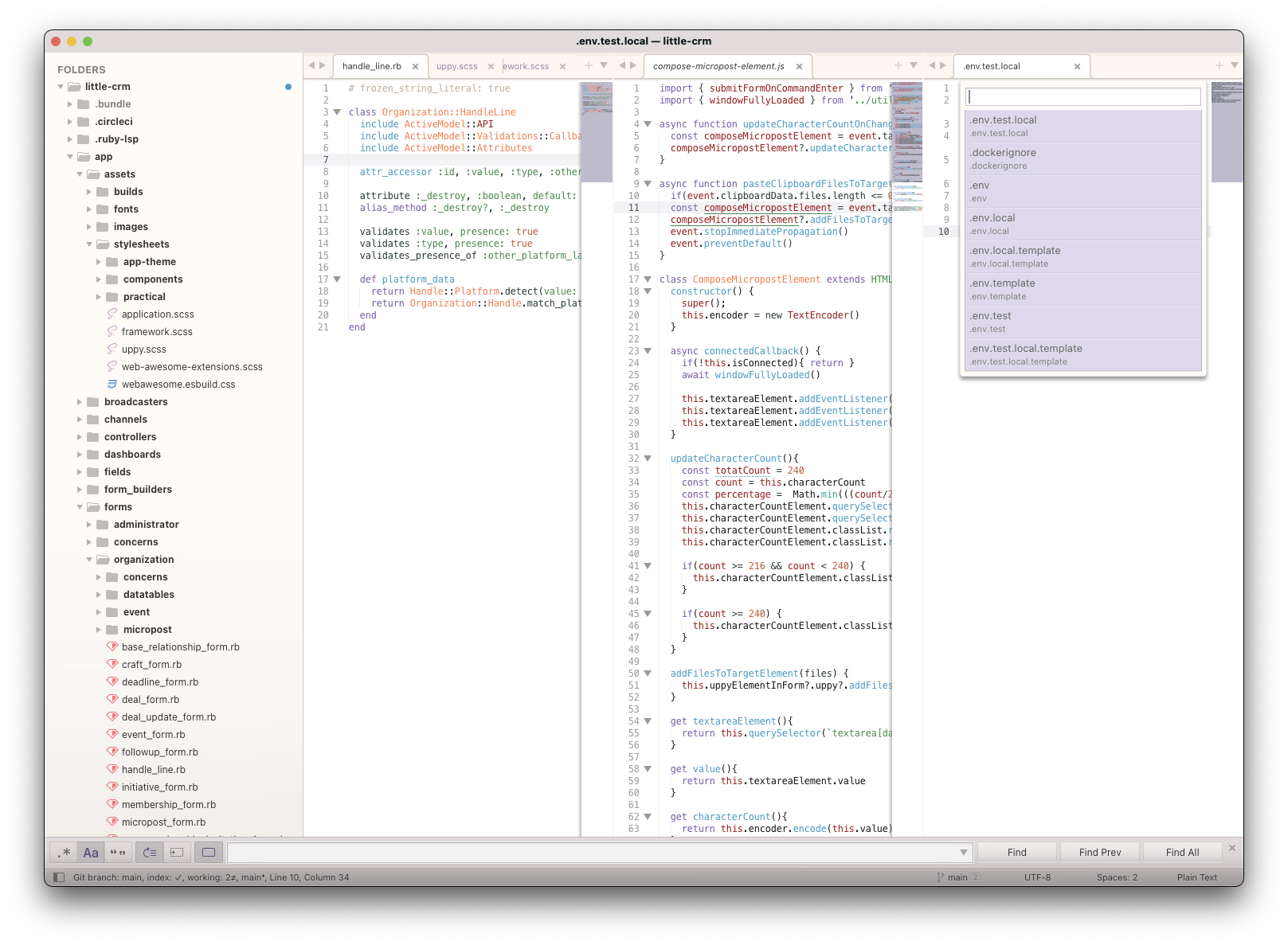

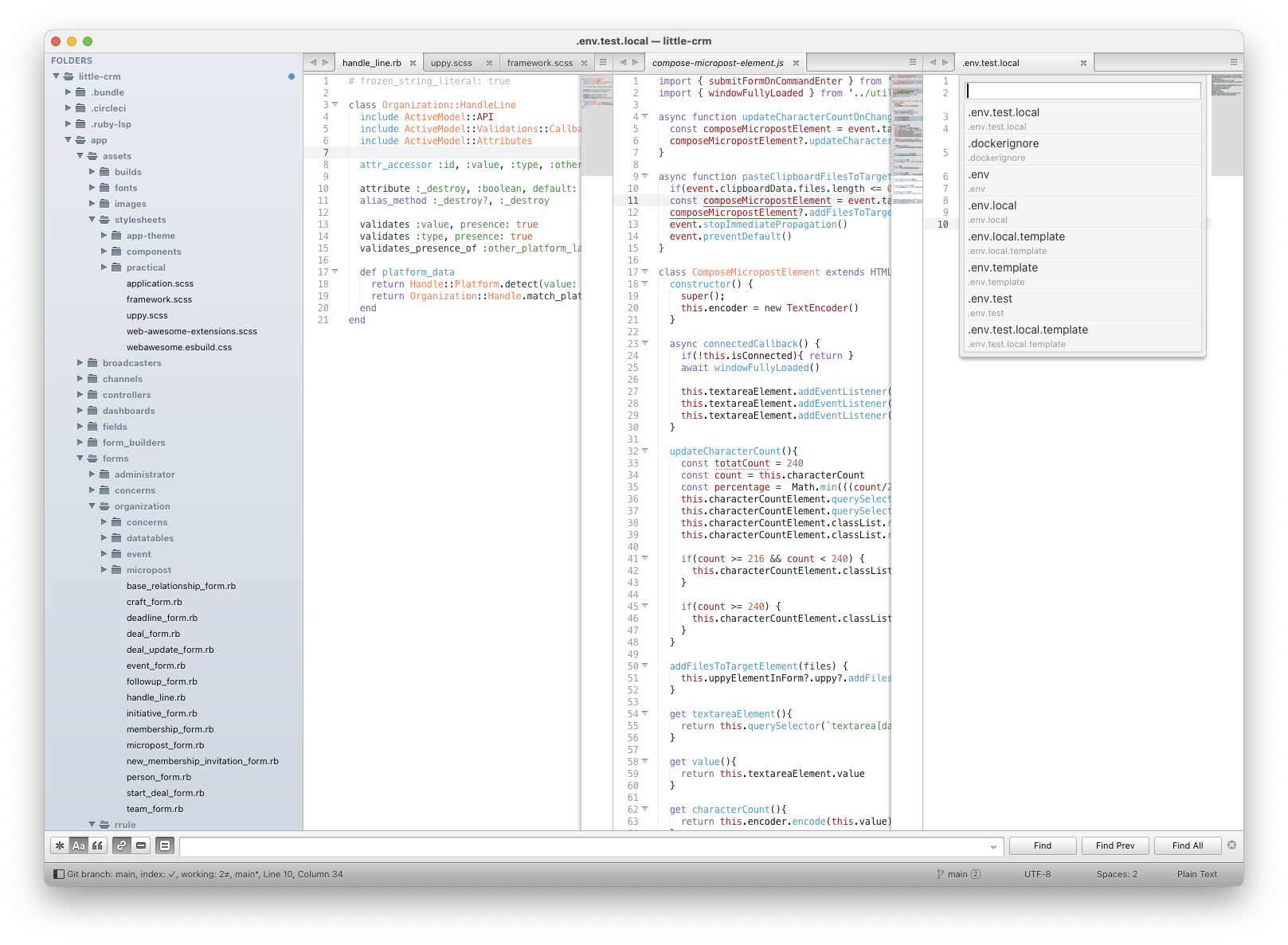

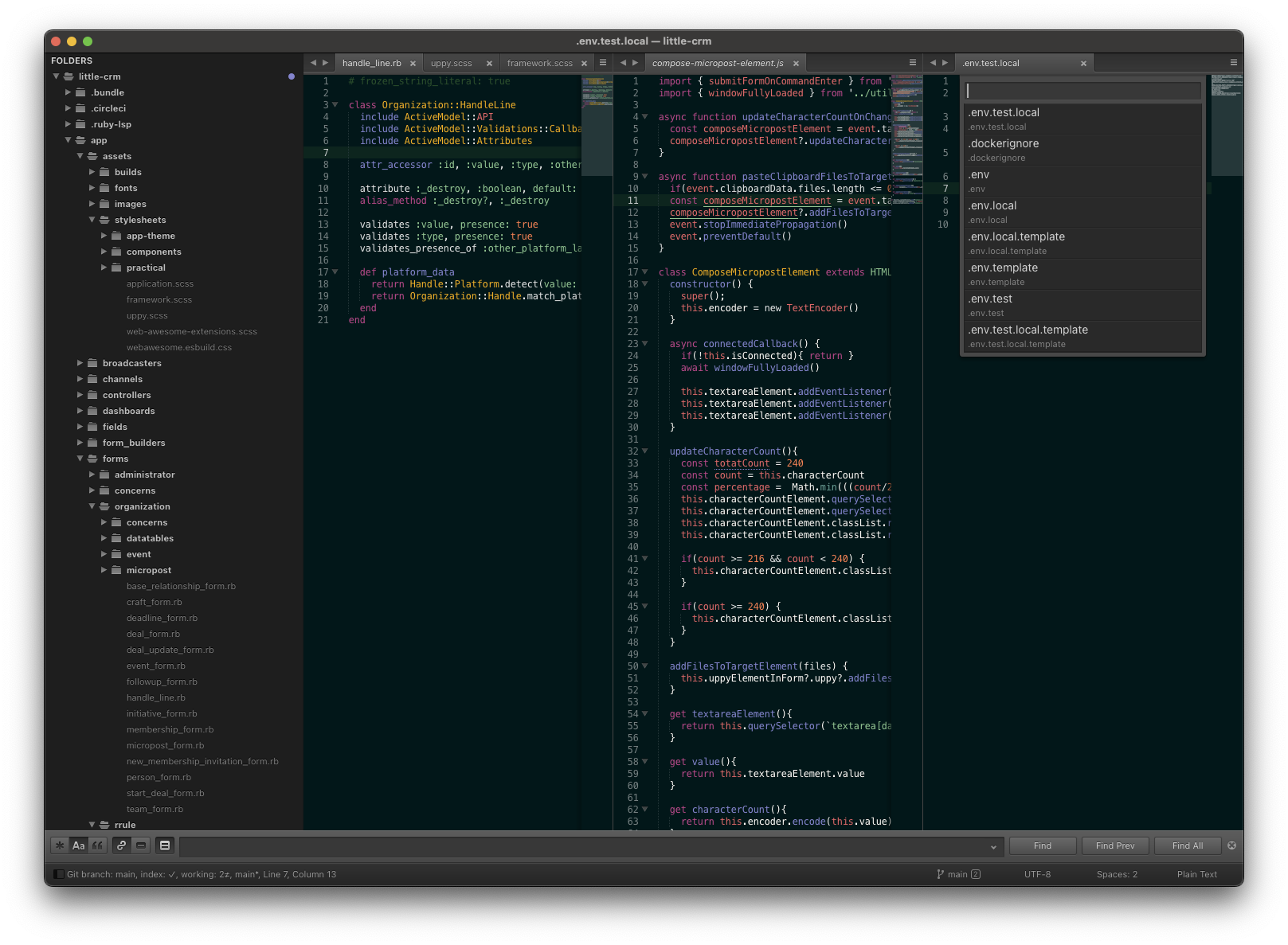

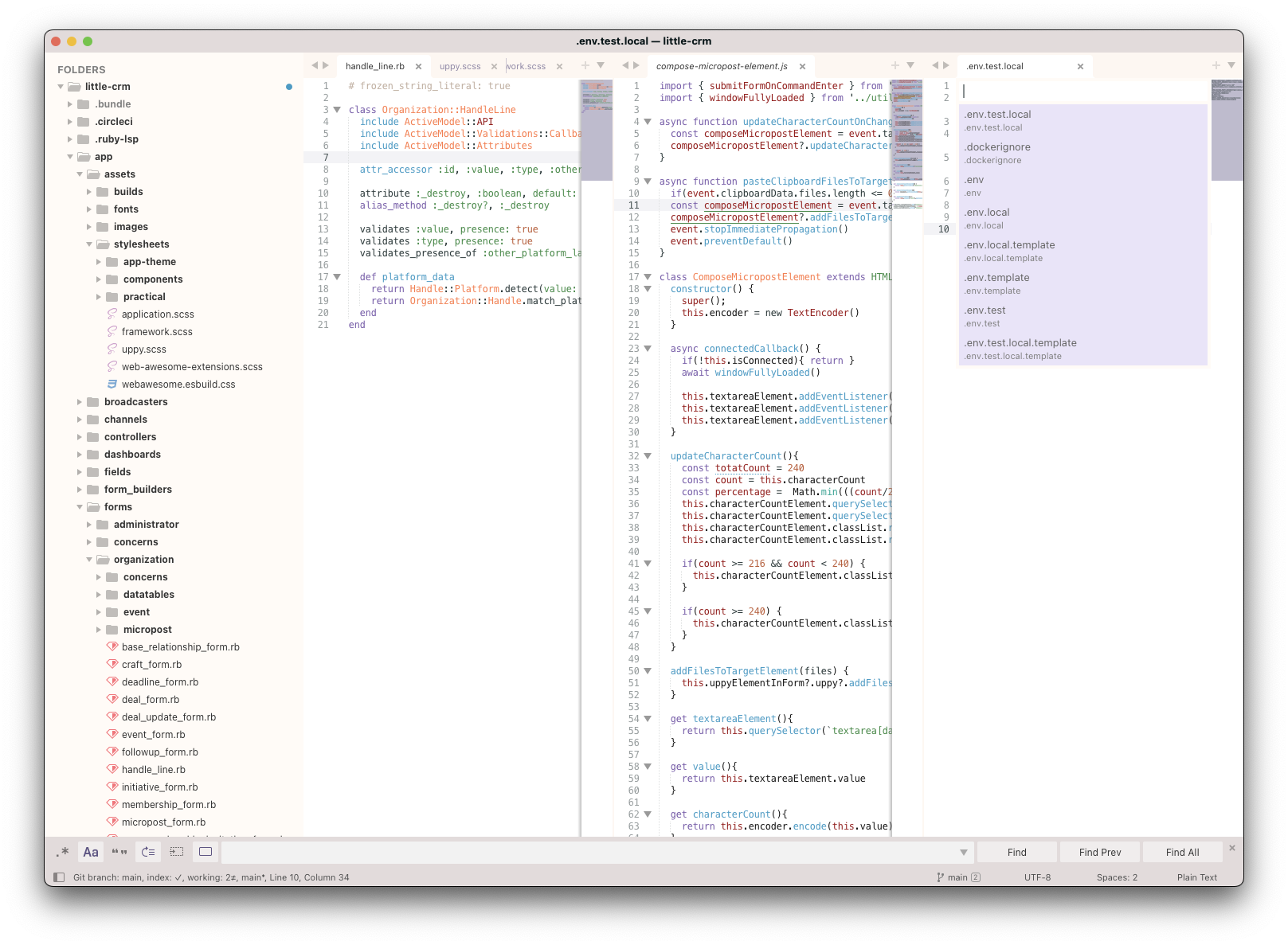

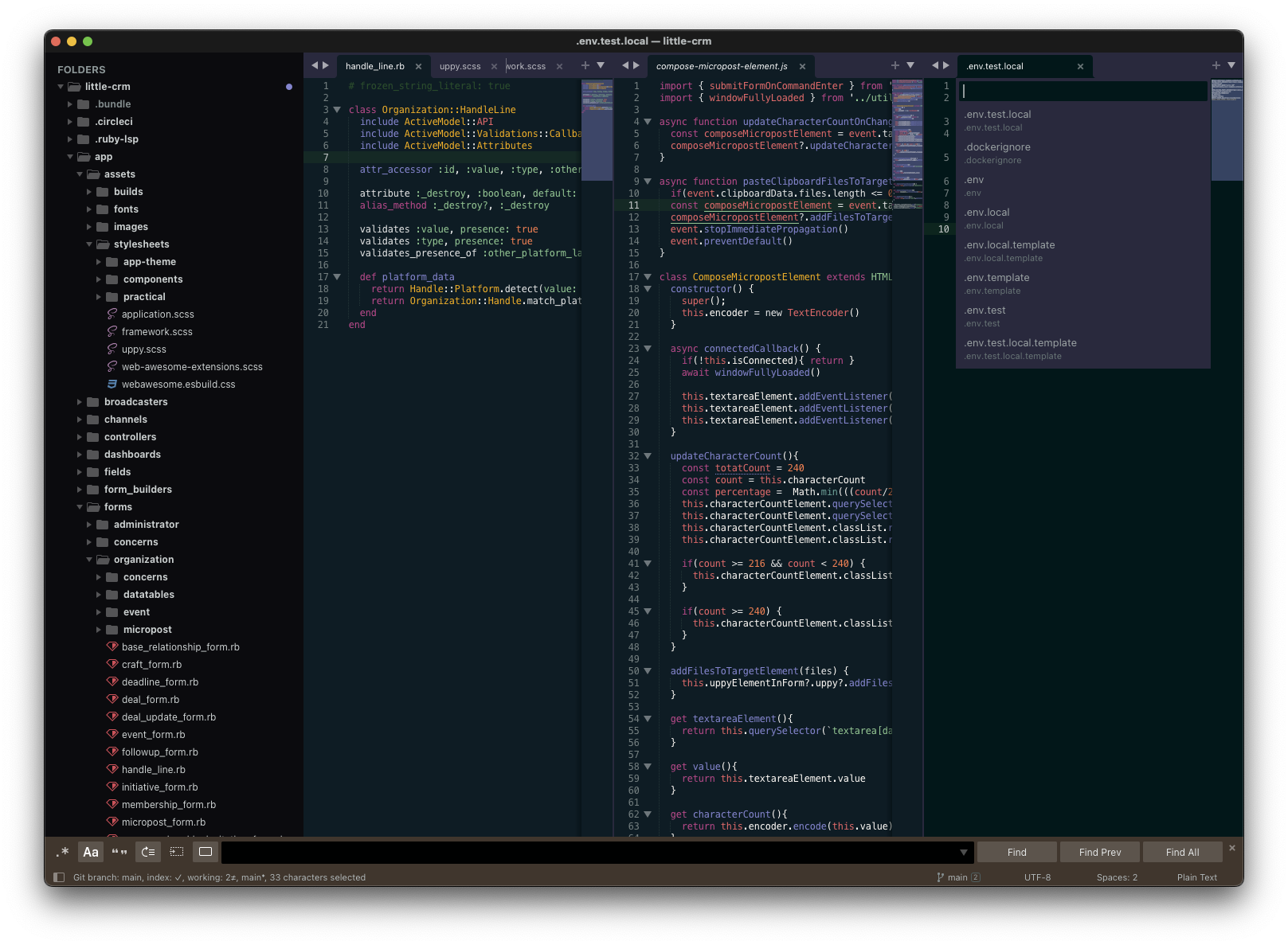

The first pass at Practical Adaptive, a hybrid of Soda + Adaptive for Sublime

Soda Light/Dark

Adaptive Light/Dark

“How’s today been?!”

From a junior interested in Ruby:

I’ve recently gone down a rabbit hole, do you know who DHH is? That’s a dumb question, I assume you do. Does the Rails community generally accept him?

What if I told you this was like…the 3rd longest/most draining conversation I’ve had today

I really want to be one of those cool kids with a fancy programming font. But I can’t really beat Menlo, Regular, 12pt in terms of compactness across line height & width, while still being readable.

Inspired by @chaelcodes.bsky.social ’s @xoruby.com talk and a love of Base16 as a color scheme, I’ve put together a repo you can use to start making your own color schemes using the Tinted Theming project’s tooling: github.com/practical…

You know I had to test-drive ReActionView today. Marco & Co. are beyond cooking with gas with ReActionView. And this is only 0.1.0

Bonkers!

ABSOLUTELY UNHINGED

Hey, I just met you, and this is crazy, but how about you share your number & I will give you a call before we push it to a meeting :) maybe

![The body of an email: Hello Thomas, Hats off on landing the role as Chief Technology Officer at Punchpass. Have you considered enhancing your marketing team with additional support? We provide bespoke marketing services with no hour caps, designed for companies like yours. Would you be interested in a quick discussion for 17 minutes on how we can help [REDACTED] reduce costs in your marketing activities? On a side note, how about you share your number & I will give you call before we push it to a meeting :) Gabriel Taylor, Dingus & Zazzy](https://cdn.uploads.micro.blog/51932/2025/unhinged-cold-email.jpg)

I’m speaking at XO Ruby in Atlanta on September 13 about a topic near and dear to me: making sure we’ve got the next generation of Rubyists! You should come hang out! The lineup looks amazing.

![A version of the "My body is a machine" meme, but with "My [Safari Icon] is a machine that turns Dispatched Events into Handled Events"](https://cdn.uploads.micro.blog/51932/2025/abd09b.jpg)